We live in a digital world, but we are fairly analogue creatures.

Omar Ahmad

Netflix’s new documentary, The Social Dilemma, is a startling exposé of the dangers of the internet, particularly social media. Director Jeff Orlowski works together with a group of experts to reveal the intrinsically predatory nature of our newest technologies. These experts are primarily former members of the tech industry, ranging from Tristan Harris, who was a Design Ethicist at Google, to Tim Kendall, Facebook’s former Director of Monetization. Their concerns and interviews are voiced against the backdrop of a dramatized family that is struggling to deal with its technology addictions. Let’s start with a brief review of the film before we analyse the points it raises on the dangers of social media.

Review: 4/5

Something is very wrong with our technology. This is the basic premise behind The Social Dilemma. Jeff Orlowski (you may know him for his films on global warming, Chasing Ice and Chasing Coral) has gathered a rather diverse group of experts who are all worried about the psychological, societal, and financial toll that modern technology is taking on us. Minimalist visuals and dramatic music quickly prime the viewer for the revelation that our friends at Facebook, Google, Twitter, Snapchat, etc. may not be so friendly after all.

The Pros: The editing is commendable – interview snippets, portentous-sounding quotes, and the occasional illustrated statistic allow Orlowski to approach very broad concerns one step at a time while making a compelling case. While a lot of the “damaging” material may only be anecdotal, the fact that these concerns are raised by people who left the tech industry on moral grounds speaks for itself.

The Cons: Underlying the interviews is a fictional dramatization of a family coming to grips with its technology addiction. This is a bit of a mixed bag, though I will say that I understood the necessity on a subsequent viewing. While the acting is by no means stellar, it allows us to see the “real world” impact that the experts are warning us about. Particularly jarring is a series of cut scenes that portray a personified version of the social media algorithm: how it is continually pushing us to interact with the app while selling advertisements and, most chillingly, creating an ever more lifelike model of us.

The final negative is the paradox the film creates. It warns us of how technology is exploiting our fears to change our behaviour while essentially doing the same thing. The fact that most people will only watch the film because it is recommended by Netflix’s algorithm is a perfect example of the influence the film is warning against.

Critique aside, the documentary is definitely worth watching. A perfect film to introduce us to the dangers of modern technology.

Analysis: What is the problem?

This is the first question raised by Orlowski and simultaneously perhaps the most difficult to answer. The last 20 years have seen an evolution in technology that is unprecedented in human history. A teenager with a smartphone now has access to possibilities of communication and transport that an emperor could never dream of. On the other hand, our moralities and societies have only received small “updates” in the last decades, while our psychology has remained almost unchanged. In other words, we are developing potentially harmful products at a far greater rate than we can possibly regulate them. Many internet platforms have a user-base larger than the population of most countries (Facebook alone has 2.7 BILLION users), yet their digital and international nature means that they often face less regulation than a roadside lemonade stand. At the same time, the digital world is having a greater influence on our real lives. As Tristan Harris from the Center for Humane Technology says,

“Never before in history have 50 designers, 20 to 35 year-old white guys, in California, made decisions that would have an impact on 2 billion people. 2 billion people will have thoughts that they didn’t intend to have because a designer at Google said that this is how notifications work on that screen that you wake up to in the morning.”

This is perhaps the heart of the matter: our technology is rapidly dominating our day-to-day lives. Internet platforms are having deep and concerning effects on the psychological, social, and even political levels. Most alarmingly, these platforms are controlled by a tiny group of developers and they are using a business model that promotes the collection of personal data and exploitative psychology. In other words, this technology is dangerous because of how we use it, the effects it can have in the real world, and the people controlling it.

Am I addicted?

“Do you look at your phone before you pee in the morning or during?”

How strange that many of us can only decide between these two alternatives. The internet has deeply integrated itself into modern life, and smartphones allow us to have the internet available every second of the day. It sometimes feels almost impossible to put that little screen down, and that’s no coincidence: our technology is designed to be as addictive as possible.

We think of technology as a tool, something which allows us to be more effective. We use a tool to save time. Social media is not designed to save us time, but to use our time. It is engineered for us to use it, and every app on your phone and computer is fighting for a larger share of your attention. These platforms are continually tweaking their look to see what works and how they can influence us to use their product more. When the brain is positively stimulated it releases dopamine, the feel-good hormone. Much like a slot machine at a casino, these apps use happy chimes, flashing lights, and other effects to give us a hit of dopamine. We crave another hit, so we open the app to see what’s new.

This is where The Social Dilemma shines, by allowing the designers to explain how our social media came to be. They unravel how they engineer psychology into the technology itself, playing off our deep-seated needs for social approval, our curiosity, our fears, and our anger. Photo-tagging forces us to view the photo because we need to know if a potentially compromising photo has been published. Push notifications “helpfully” alert us to new content, but in reality they are just a façade to get us to open the app again. Infinite scrolling is essentially a slot machine: every time we reload the page, something new appears, and maybe, just maybe, the new content is interesting and gives us that dopamine hit. The inventor of the feature, Aza Raskin, ironically talks about how he had to write his own software to externally limit the time he spent on Reddit. This reflects an unfortunate feature of human psychology, that we cannot change our desires even when we are aware of the unhealthy influence. As Sean Parker, a former President at Facebook, says, “It’s exactly the kind of thing a hacker like myself would come up with, because you are exploiting a vulnerability in human psychology.”

These are intelligent, mature people who struggle to stop using their own creations. How much more dangerous are these creations for children and teenagers, who are still developing mentally? These new apps and features are not without risks, and even the most well-meaning ideas can lead to disastrous results. When the “Like” button was released, it was meant to be a means of spreading positivity. Instead, likes (and especially the later “dislike”) became a simple metric to compare yourself to others. It is no secret that young people, particularly teenage girls, often feel pressure to take revealing pictures of themselves simply to get more likes.

This problem is compounded by data collection. As the AI behind our technology becomes more advanced, it can exploit these vulnerabilities more efficiently. As Harris says, we are holding up a mirror to ourselves and using that information to exploit ourselves better.

Ignore it, it’s just online

The internet is often an unforgiving place. The anonymity and physical distance allow us to forget that we are dealing with real people with real feelings. Trolling and cyber-bullying have become serious problems, and shutting down your computer is not enough to protect you. The youth is particularly integrated in the digital world, and to exclude yourself from a specific platform is to distance yourself from your peers. Orlowski paints an alarming picture of how digital media is negatively affecting us, particularly the youth. Using data gathered by the US Centers for Disease Control and Prevention, the film shows that hospitalizations due to self-harm have skyrocketed since 2010 (a 62% increase in females aged 15-19, and 189% in females aged 10-14).

These two groups have also seen marked increases in suicide since 2009, of 70% and 151% respectively.

The use of filters in photo-sharing apps has given rise to “Snapchat Dysmorphia”, which leads many to receive plastic surgery so that they can look like their doctored photos.

Putting the social in social media

While you can argue that social media may not affect you as an individual, we can no longer ignore the effect it is having on our society. From Russian hackers and Cambridge Analytica pushing Trump’s election in 2016 to the Arab Spring revolutions between 2010 and 2012, we see that social media is being exploited to effect real political change. What was once foreign propaganda is now domestic fake news, a collection of dubious facts and half-truths prefaced by a click-bait title. Many of these sites are pushing a specific agenda, while many others are just fabricating stories purely to generate views, which in turn generate ad revenue. These are some of the challenges facing modern journalism, both for consumers and publishers.

Reliability: Being first is more profitable than being right. There are hordes of websites and “news outlets” looking to generate clicks. Whereas old media needed you to believe them (to ensure that you would continue to buy their newspapers), new media often just looks for that first click. They don’t care if you even read the article, as long as you see their adverts.

Speaking of reliability, we are also seeing a truly bizarre consequence of fading trust in old media. As people start to mistrust the big news centers, they are happy to believe the conspiracy theories of real people on social media, especially if the article is shared by someone they know and trust. The fact that these “real people” are as fake as their accounts seems to worry no one.

Echo chambers: News feed algorithms are designed to supply you with the content you tend to engage with. Have you ever noticed how you find a flurry of content agreeing with your current stance? You wonder how people can believe anything else when there is so much information verifying your viewpoint? Are those people on the “other side” not seeing all this? No. They are not. Instead, they are seeing a mountain of content agreeing with their viewpoint and disproving yours. This is an echo chamber, a digital room where we only see the thoughts and views we share. There isn’t even necessarily malicious intent behind it: the AI just follows what you view and supplies you with it.
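To make the mechanism concrete, here is a minimal sketch of a feed that simply "follows what you view". It is only an illustration under my own assumptions: the function `rank_feed`, the topic labels, and the sample posts are all hypothetical, and real platforms use far more elaborate ranking systems that the film does not describe in detail.

```python
from collections import Counter

def rank_feed(posts, view_history):
    """Order posts so that topics the user has viewed most often come first.

    Hypothetical toy model: 'interest' is just a count of past views per topic.
    """
    topic_counts = Counter(view_history)
    # sorted() is stable, so posts on equally viewed topics keep their original order.
    return sorted(posts, key=lambda post: topic_counts[post["topic"]], reverse=True)

# Made-up sample data: the user has mostly viewed content in favour of policy A.
posts = [
    {"title": "Why policy A works", "topic": "pro_A"},
    {"title": "Why policy A fails", "topic": "anti_A"},
    {"title": "More evidence for policy A", "topic": "pro_A"},
]
view_history = ["pro_A", "pro_A", "anti_A", "pro_A"]

feed = rank_feed(posts, view_history)
for post in feed:
    print(post["title"])
```

Even in this toy version, the one dissenting article sinks to the bottom of the feed, and every further click on the content at the top pushes it down again. Nobody has to intend an echo chamber for one to form.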

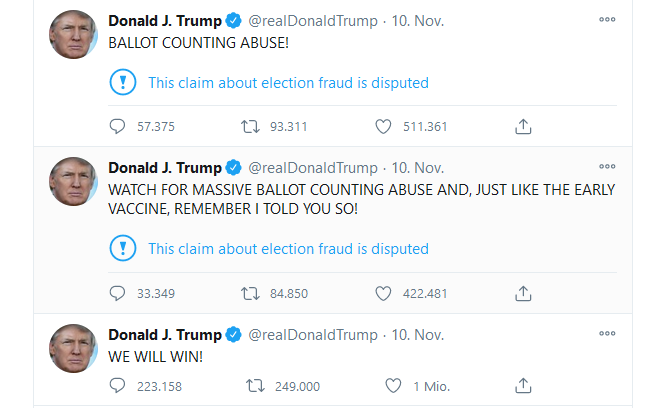

Outrage farming: The flip side of this coin is what I would call outrage farming. Assume you believe strongly in animal rights. A headline about saving koalas from a bush fire will generally garner a like. A video of a cow escaping a slaughterhouse will elicit a view. But a headline about Japanese whalers hunting endangered species? How can this be? You have to see this. Infuriated, you lash out in the comment section and share this unbelievable wrong with all your friends. Is the story true? Irrelevant. The computer chose it for you because it knows that anger and rage will cause you to interact with the platform for a longer period and more intensely than anything else. On the one hand, you have a host of information agreeing with your world view; on the other, you have content demonizing those who disagree with you. It is no wonder that we struggle to find common ground for a meaningful discussion. In the 2020 US election, both Twitter and Facebook attempted to combat the flow of misinformation by flagging posts that claimed results prematurely and posts that made unverified accusations against the election process. Is this actually a good thing? We are effectively allowing a company to become our Thought Police, and we have no idea WHO is actually sitting behind the keyboard.

This brings us to our final point…

Growing by leaps and bounds

On February 4, 2004, Mark Zuckerberg and his college roommates set up an online student directory for Harvard students called Thefacebook. 14 years later, Congress is holding Facebook and Zuckerberg accountable for influencing the presidential election. How did we get here?

Neil Armstrong described walking on the moon as a giant leap for mankind. If that’s true, then Facebook and other internet platforms represent a rocket-propelled flight, potentially off a cliff. How can we expect a young programmer to defend democracy? Even the most responsible developer can become overwhelmed when his company triples in worth every week, yet we believe these companies when they claim to respect our privacy.

The effects of social media are only amplified by how quickly new technology can spread. As a rule, technology has a habit of propagating its own use before the side effects are fully understood. X-rays were discovered in 1895, and the first death due to overexposure occurred in 1904. Yet people were ignoring the effects of x-ray overexposure well into the 1950s, despite a litany of painful and obvious effects such as blistering and hair loss, as well as heightened cancer rates. Radium was being used in glow-in-the-dark paint, make-up, and even “vitamin” tablets for almost 40 years before legislators accepted the link between radium paint and factory workers losing their teeth and dying. Why do I mention these chilling examples? I merely wish to point out how it can take up to 50 years for legislation to catch up to the danger of a new and potentially deadly technology. After a string of controversial data leaks and privacy issues, Facebook still boasts 2.7 billion monthly users. Even if only half of the accounts correspond to actual users, Facebook alone is currently “affecting” close to 17% of the entire global population.
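For anyone who wants to check that last figure, here is the back-of-the-envelope calculation. The 2.7 billion users and the "only half are real" scenario come from the paragraph above; the world population of roughly 7.8 billion (around 2020) is my own assumption and not a number from the film.

```python
# Figures from the text: 2.7 billion monthly users, of which we assume only half are real people.
monthly_users = 2.7e9
real_user_fraction = 0.5

# Assumption (not from the film): a world population of roughly 7.8 billion in 2020.
world_population = 7.8e9

real_users = monthly_users * real_user_fraction
share = real_users / world_population

print(f"{real_users / 1e9:.2f} billion people, about {share:.0%} of the world")
```

With these inputs the share comes out at a little over 17%, so the estimate holds even under the pessimistic assumption about fake accounts.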

What should we do?

Orlowski ends his film with a call to action. While the viewer is probably planning to defenestrate their phone by now, most of the experts still believe that social media can be a tool for good. Their solution is tighter regulation, particularly with regard to protecting children. I don’t think anyone disagrees that we need a strict set of rules going forward, but the question is what these laws should be. A possible start is a data tax: a financial incentive to stop gathering ALL of our private data. But this is just a start. The international nature of the internet and these tech companies only exacerbates the difficulties in finding a unified solution. Maybe the theme of Orlowski’s next documentary?